Welcome to Smartindia Student's Blog

THE END OF COURSE TEST.

The End of Course Test (EOCT) is an academic assessment conducted in many U.S. states by the State Board of Education.[1] It is given from the ninth to the twelfth grade: Georgia, for example, tests across these grades, and North Carolina tests in any of the four core class subjects (math, science, social studies, and English).

North Carolina schools administer an EOCT in English II, Algebra I, and Biology I. Initially, an EOCT was administered in English I, but CMS (Charlotte-Mecklenburg Schools) decided to replace the English I EOCT with one in English II.[2] The official purpose of the test is to assess both individual and group knowledge and skills. EOCTs are mandatory, and a minimum score is required for graduation eligibility. Additionally, a North Carolina student's EOCT score must account for at least 25% of the student's final grade in the relevant course.[3]

Georgia high schools are required to administer a standardized, multiple-choice EOCT in each of eight core subjects: Algebra I, Geometry, U.S. History, Economics, Biology, Physical Science, Ninth Grade Literature and Composition, and American Literature and Composition. The official purpose of the tests is to assess "specific content knowledge and skills." Although a minimum test score is not required for the student to receive credit in the course or to graduate from high school, completion of the test is mandatory. For students in ninth grade after 2011, the EOCT score makes up 20% of the course grade.[4] Since the EOCT is an official, state-administered test, any violation or interference can result in the invalidation of the scores of all students taking the exam in that subject. Interference can include using cellphones or MP3 players, reading books on the same subject as the exam, and talking.

OUR INDIAN CONSTITUTION.

The Constitution of India is the supreme law of India. It lays down the framework defining fundamental political principles, establishes the structure, procedures, powers, and duties of government institutions, and sets out fundamental rights, directive principles, and the duties of citizens. It is the longest[1] written constitution of any sovereign country in the world, containing 448[Note 1][2] articles in 25[Note 2] parts, 12[Note 3] schedules, 5 appendices and 98[Note 4] amendments (out of 120[3] Constitution Amendment Bills). Besides the English version, there is an official Hindi translation. Dr. Bhimrao Ramji Ambedkar is widely regarded as the father of the Indian Constitution.

The Constitution follows a parliamentary system of government, and the executive is directly accountable to the legislature. Article 74 provides for a Council of Ministers headed by the Prime Minister of India, the head of government. The Constitution also provides for a President of India and a Vice-President of India under Articles 52 and 63. Unlike the Prime Minister, the President largely performs ceremonial roles.

The Constitution of India is federal in nature. Each State and each Union territory of India has its own government. The counterparts of the President and Prime Minister are the Governor (in the States) or the Lieutenant Governor (in Union territories) and the Chief Minister. The 73rd and 74th Amendment Acts also introduced the system of Panchayati Raj in rural areas and municipalities in urban areas. Also, Article 370 of the Constitution gives special status to the state of Jammu and Kashmir.

The Constitution was adopted by the Constituent Assembly of India on 26 November 1949 and came into effect on 26 January 1950.[4] The date of 26 January was chosen to commemorate the Purna Swaraj declaration of independence of 1930. With its adoption, the Union of India officially became the modern and contemporary Republic of India, and the Constitution replaced the Government of India Act 1935 as the country's fundamental governing document. To ensure constitutional autochthony, the framers inserted Article 395, by which the Indian Independence Act 1947 was repealed.[5] The Constitution declares India to be a sovereign, socialist, secular, democratic republic, assuring its citizens of justice, equality, and liberty, and endeavors to promote fraternity among them.[6] The words "socialist" and "secular" were added to this definition in 1976 by the 42nd Amendment (often called the "mini-constitution").[7] India celebrates the Constitution's coming into force on 26 January each year as Republic Day.[8]

Background

The major portion of the Indian subcontinent was under British rule from 1857 to 1947. Economic, political and social developments during this period contributed to the gradual rise of the Indian independence movement, which sought independence from foreign rule. After the First Revolution of India in 1857, direct rule by the British Crown was established. When the Constitution of India came into force on 26 January 1950, it repealed the Indian Independence Act. India ceased to be a dominion of the British Crown and became a sovereign democratic republic. 26 November, the date on which the Constitution was adopted in 1949, is observed as National Law Day. The Indian constitution is the world's longest constitution. At the time of commencement, it had 395 articles in 22 parts and 8 schedules. It consists of almost 80,000 words and took 2 years, 11 months and 18 days to draft.

In the United Kingdom, the Secretary of State for India (along with the Council of India) was the authority through whom Parliament exercised its rule, and the office of Viceroy of India was established (along with an Executive Council in India, consisting of high officials of the British Government). The Indian Councils Act 1861 provided for a Legislative Council consisting of the members of the Executive Council and non-official members. The Indian Councils Act 1892 established provincial legislatures and increased the powers of the Legislative Council. Although these Acts increased the representation of Indians in the government, their power still remained limited. The Indian Councils Act 1909 and the Government of India Act 1919 further expanded the participation of Indians in the government.

Previous Legislation as Sources

The Constitution of India is drawn from many sources. Keeping in mind the needs and conditions of India, the framers of the Constitution borrowed features freely from previous legislation.

Government of India Act 1858

The First Revolution of India in 1857 prompted the British Government to pass this Act, which was introduced to deal with the aftermath of the revolt. The Act ended the East India Company's rule and transferred its powers to the British Crown, establishing direct rule. The provisions of the Act were:[9]

- Provision for the creation of an Indian Civil Service under the control of the Secretary of State.

- The Crown was empowered to appoint a Governor-General and the Governors of the Presidencies.

- The Company's territories in India were to be vested in the Queen, the Company ceasing to exercise its power and control over these territories. India was to be governed in the Queen's name.

- All the property of the East India Company was transferred to the Crown. The Crown also assumed the responsibilities of the Company as they related to treaties, contracts, and so forth.[10]

- The Queen's Principal Secretary of State received the powers and duties of the Company's Court of Directors. A council of fifteen members was appointed to assist the Secretary of State for India, serving as an advisory body on Indian affairs. The Secretary of State became the real channel for all communications between Britain and India.

- Abolition of double government.

Indian Councils Act 1861

The Indian Councils Act 1861 was an Act of the Parliament of the United Kingdom that transformed the Viceroy of India's executive council into a cabinet run on the portfolio system.[11] This cabinet had six "ordinary members", each of whom took charge of a separate department of the government in Calcutta: home, revenue, military, law, finance, and (after 1874) public works.

The Indian Councils Act 1861 is an important landmark in the constitutional and political history of India. It restored the legislative power that had been taken away by the Charter Act of 1833. The legislative council at Calcutta was given extensive authority to pass laws for British India as a whole, while the legislative councils at Bombay and Madras were given the power to make laws for the "Peace and good Government" of their respective presidencies. The Governor General was given the power to create new provinces for legislative purposes and to appoint Lieutenant Governors for them.[12] Its features are:[9]

- Indians were involved in the law-making process. For this purpose, the Viceroy nominated the Raja of Benaras, the Maharaja of Patiala and Sir Dinkar Rao.

- Decentralization of legislative powers.

- Establishment of new legislative councils in Bengal, the North-Western Provinces and Punjab in 1862, 1886 and 1897 respectively.

- Introduction of portfolio system.

- It empowered the Viceroy to issue ordinances without the concurrence of the legislative council during an emergency. Such an ordinance remained in force for six months.

Indian Councils Act 1892

The Act was passed in response to the Indian National Congress's demand to expand the legislative councils. It increased the number of non-official members in both the central and provincial legislative councils; the non-official members of the Indian Legislative Council were henceforth to be nominated by the Bengal Chamber of Commerce and the provincial legislative councils. In 1892, the council consisted of 24 members, only five of whom were Indians.[13] Its features are:[9]

- Legislative councils were given the power to discuss the budget.

- It provided for the nomination of some non-official members of the central legislative council by the Viceroy on the recommendation of the provincial legislative councils, and of non-official members of the provincial legislative councils by the Governors on the recommendation of district boards, municipalities, universities, trade associations, zamindars and chambers.

Indian Councils Act 1909

The Indian Councils Act 1909, commonly known as the Morley-Minto Reforms, was an Act of the Parliament of the United Kingdom that brought about a limited increase in the involvement of Indians in the governance of British India. The Act of 1909 was important for the following reasons:

- It effectively allowed the election of Indians to the various legislative councils in India for the first time. Previously some Indians had been appointed to legislative councils.

- The introduction of the electoral principle laid the groundwork for a parliamentary system even though this was contrary to the intent of Morley.

- Muslims had expressed serious concern that a first-past-the-post electoral system, like that of Britain, would leave them permanently subject to Hindu majority rule. As demanded by the Muslim leadership, the Act of 1909 therefore provided for separate electorates for Muslims.

The Act amended the Indian Councils Acts of 1861 and 1892.[14] Its features are:[9]

- The maximum number of nominated and elected members of the Legislative Council at the Center was increased from 16 to 60. The number did not include ex-officio members.[15]

- The right of separate electorate was given to the Muslims.

- Official members were to form the majority in the central council, but in the provinces non-official members would be in the majority.

- The members of the Legislative Councils were permitted to discuss budgets, suggest amendments and even vote on them, except for items designated as non-vote items. They were also entitled to ask supplementary questions during legislative proceedings.

- The Secretary of State for India was empowered to increase the number of members of the Executive Councils of Madras and Bombay from two to four.

- Two Indians were nominated to the Council of the Secretary of State for Indian Affairs.

Government of India Act 1919

After World War I, the British Government opened the door for Indians to public office and employment. The provisions of the Act were:[9]

- Relaxation of central control over the provinces by demarcating and separating central and provincial subjects.

- It further divided the provincial subjects into two parts: transferred subjects (administered by the Governor with the help of ministers responsible to the legislative council) and reserved subjects (administered by the Governor and the executive council without being responsible to the legislative council).

- Introduction of diarchy, bicameralism and direct elections, and the establishment of a central Public Service Commission in 1926.

- The franchise was granted to a limited number of people on the basis of property, tax and education.

- Separation of the central budget from the provincial budgets.

- Provision for the appointment of a statutory commission.

Government of India Act 1935

The provisions of the Government of India Act 1935, though never implemented fully, had a great impact on the Constitution of India. Many key features of the Constitution are directly taken from this Act. It is a lengthy and detailed document with 321 sections and 10 schedules, and much of today's Constitution is drawn from it. Its features are:[9]

- It provided for the establishment of an All India Federation. Subjects were divided into a federal list, a provincial list and a concurrent list.

- Abolition of diarchy in the provinces and introduction of provincial autonomy.

- Abolition of the Council of India.

- Establishment of the RBI, a Federal Court, Provincial Public Service Commissions and Joint Public Service Commissions.

- Extension of bicameralism, communal representation and franchise.

The federal structure of government, provincial autonomy, a bicameral central legislature consisting of a federal assembly and a Council of States and the separation of legislative powers between the centre and states are some of the provisions of the Act which are present in the Constitution of India.

Indian Independence Act 1947

The legislation was formulated by the government of Prime Minister Clement Attlee and the Governor General of India Lord Mountbatten, after representatives of the Indian National Congress,[16] the Muslim League,[17] and the Sikh community[18] came to an agreement with the Viceroy of India, Lord Mountbatten of Burma, on what has come to be known as the 3 June Plan or Mountbatten Plan. The Prime Minister of the United Kingdom announced on 20 February 1947 that:

- British Government would grant full self-government to British India by June 1948 at the latest.

- Partition of India and Pakistan.

- The future of Princely States would be decided after the date of final transfer is decided.[19]

- Empowering the new nations to frame their own constitutions.

Under the Act, which received royal assent on 18 July 1947, British India was divided on 15 August 1947 into two new independent states, India and Pakistan, which were to be dominions under the Commonwealth of Nations until each had finished drafting and enacting a new constitution. The Constituent Assembly was divided into two for the separate states, with each new Assembly having sovereign powers transferred to it for the respective dominion. The Act also terminated British suzerainty over the princely states, each of which was left to decide whether to accede to one or other of the new dominions or to continue as independent states in their own right.

Constituent Assembly

The Constitution was drafted by the Constituent Assembly, which was elected by the elected members of the provincial assemblies.[20] Dr B. R. Ambedkar, Jawaharlal Nehru, C. Rajagopalachari, Rajendra Prasad, Sardar Vallabhbhai Patel, Kanaiyalal Munshi, Purushottam Mavalankar, Maulana Abul Kalam Azad, Shyama Prasad Mukherjee, Nalini Ranjan Ghosh, and Balwantrai Mehta were some important figures in the Assembly. There were more than 30 members of the scheduled classes. Frank Anthony represented the Anglo-Indian community, and the Parsis were represented by H. P. Modi. The Chairman of the Minorities Committee was Harendra Coomar Mookerjee, a distinguished Christian who represented all Christians other than Anglo-Indians. Ari Bahadur Gurung represented the Gorkha community. Prominent jurists like Alladi Krishnaswamy Iyer, Benegal Narsing Rau, K. M. Munshi and Ganesh Mavalankar were also members of the Assembly. Sarojini Naidu, Hansa Mehta, Durgabai Deshmukh, Rajkumari Amrit Kaur and Vijayalakshmi Pandit were important women members.

The first, temporary president of the Constituent Assembly, who served for two days, was Dr Sachidanand Sinha. Later, Rajendra Prasad was elected president of the Constituent Assembly.[20] The members of the Constituent Assembly met for the first time on 9 December 1946.[20]

Drafting

At the 14 August 1947 meeting of the Assembly, a proposal for forming various committees was presented.[20] Such committees included a Committee on Fundamental Rights, the Union Powers Committee and the Union Constitution Committee. On 29 August 1947, the Drafting Committee was appointed, with Dr B. R. Ambedkar as the Chairman along with six other members, assisted by a constitutional advisor. These members were Kanaiyalal Maneklal Munshi (K M Munshi, Ex-Home Minister, Bombay), Alladi Krishnaswamy Iyer (Ex-Advocate General, Madras State), N Gopalaswami Ayengar (Ex-Prime Minister, J&K and later member of Nehru Cabinet), B L Mitter (Ex-Advocate General, India), Md. Saadullah (Ex-Chief Minister of Assam, Muslim League member) and D P Khaitan (scion of the Khaitan business family and a renowned lawyer). The constitutional advisor was Sir Benegal Narsing Rau (who became the first Indian judge in the International Court of Justice, 1950–54). Later B L Mitter resigned and was replaced by Madhav Rao (Legal Advisor of the Maharaja of Vadodara). Owing to the death of D P Khaitan, T T Krishnamachari was chosen to be included in the drafting committee. A Draft Constitution was prepared by the committee and submitted to the Assembly on 4 November 1948. The draft constitution was debated, and over 2000 amendments were moved over a period of two years. Finally, on 26 November 1949, the process was completed and the Constituent Assembly adopted the constitution. 284 members signed the document, and the process of constitution making was complete.[21]

The Assembly met in sessions open to the public for 166 days, spread over a period of 2 years, 11 months and 18 days, before adopting the Constitution. On 24 January 1950, the 308 members of the Assembly signed two copies of the document (one each in Hindi and English). The original Constitution of India is handwritten in calligraphy, with each page decorated by artists from Shantiniketan, including Beohar Rammanohar Sinha and Nandalal Bose. Two days later, on 26 January 1950, the Constitution of India became the law of all the States and territories of India. The official estimate of expenditure on the Constituent Assembly was Rs. 1,00,00,000. The Constitution has undergone many amendments since its enactment.[22]

ONLINE LEARNING.

Online machine learning is a model of induction that learns one instance at a time. The goal in on-line learning is to predict labels for instances. For example, the instances could describe the current conditions of the stock market, and an online algorithm predicts tomorrow's value of a particular stock. The key defining characteristic of on-line learning is that soon after the prediction is made, the true label of the instance is discovered. This information can then be used to refine the prediction hypothesis used by the algorithm. The goal of the algorithm is to make predictions that are close to the true labels.

More formally, an online algorithm proceeds in a sequence of trials. Each trial can be decomposed into three steps. First, the algorithm receives an instance. Second, the algorithm predicts the label of the instance. Third, the algorithm receives the true label of the instance.[1] The third stage is the most crucial, as the algorithm can use this label feedback to update its hypothesis for future trials. The goal of the algorithm is to minimize some performance criterion. For example, with stock market prediction the algorithm may attempt to minimize the sum of the squared differences between the predicted and true values of a stock. Another popular performance criterion is to minimize the number of mistakes when dealing with classification problems. In addition to applications of a sequential nature, online learning algorithms are also relevant in applications with huge amounts of data, where traditional learning approaches that use the entire data set in aggregate are computationally infeasible.
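The three-step trial can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `RunningMeanLearner` is a hypothetical toy learner that ignores the instance and predicts the mean of the labels revealed so far, and the performance criterion is the sum of squared differences mentioned above.

```python
from typing import Iterable, Tuple


class RunningMeanLearner:
    """Hypothetical toy learner: predicts the mean of all labels seen so far."""

    def __init__(self) -> None:
        self.count = 0
        self.label_sum = 0.0

    def predict(self, x: float) -> float:
        # Before any label has been revealed, fall back to predicting 0.0.
        return self.label_sum / self.count if self.count else 0.0

    def update(self, x: float, y: float) -> None:
        # Use the revealed label to refine the hypothesis for future trials.
        self.count += 1
        self.label_sum += y


def run_trials(learner: RunningMeanLearner, stream: Iterable[Tuple[float, float]]) -> float:
    """Run online trials and return the cumulative squared loss."""
    cumulative_loss = 0.0
    for x, y in stream:
        y_hat = learner.predict(x)           # steps 1-2: receive instance, predict
        cumulative_loss += (y_hat - y) ** 2  # step 3: true label revealed, score it
        learner.update(x, y)                 # feedback is used in later trials
    return cumulative_loss


stream = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)]
total_loss = run_trials(RunningMeanLearner(), stream)
print(total_loss)  # 4.25
```

The predictions are 0.0, 1.0 and 1.5, so the losses are 1, 1 and 2.25. The key design point is ordering: `predict` is always called before `update` sees the label.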

Because on-line learning algorithms continually receive label feedback, the algorithms are able to adapt and learn in difficult situations. Many online algorithms can give strong guarantees on performance even when the instances are not generated by a distribution. As long as a reasonably good classifier exists, the online algorithm will learn to predict correct labels. This good classifier must come from a previously determined set that depends on the algorithm. For example, two popular on-line algorithms, perceptron and winnow, can perform well when a hyperplane exists that splits the data into two categories. These algorithms can even be modified to do provably well even if the hyperplane is allowed to change infrequently during the on-line learning trials.
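A minimal perceptron sketch shows the mistake-driven character of such algorithms: the weight vector changes only when a prediction turns out to be wrong. Assumptions made here: labels are -1 or +1, a constant 1.0 feature acts as a bias term, and the short data stream is hypothetical and linearly separable. Winnow, which uses multiplicative updates instead, is not shown.

```python
from typing import List, Sequence, Tuple


def perceptron_online(
    stream: Sequence[Tuple[Sequence[float], int]], dim: int
) -> Tuple[List[float], int]:
    """Classic perceptron run in the online protocol; returns (weights, mistakes)."""
    w = [0.0] * dim
    mistakes = 0
    for x, y in stream:  # y is -1 or +1
        score = sum(wi * xi for wi, xi in zip(w, x))
        y_hat = 1 if score > 0 else -1  # predict before the label is used
        if y_hat != y:
            # Mistake: move the hyperplane toward the correct side.
            mistakes += 1
            w = [wi + y * xi for wi, xi in zip(w, x)]
    return w, mistakes


# Hypothetical separable stream: first feature is a constant bias,
# and the label is the sign of the second feature.
stream = [
    ((1.0, 2.0), 1),
    ((1.0, -1.0), -1),
    ((1.0, 3.0), 1),
    ((1.0, -2.0), -1),
]
weights, mistakes = perceptron_online(stream, dim=2)
print(weights, mistakes)  # [1.0, 2.0] 1
```

Only the first example triggers an update; the resulting hyperplane already separates the rest of the stream.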

Unfortunately, the main difficulty of on-line learning is also a result of the requirement for continual label feedback. For many problems it is not possible to guarantee that accurate label feedback will be available in the near future. For example, when designing a system that learns how to do optical character recognition, typically some expert must label previous instances to help train the algorithm. In actual use of the OCR application, the expert is no longer available and no inexpensive outside source of accurate labels is available. Fortunately, there is a large class of problems where label feedback is always available. For any problem that consists of predicting the future, an on-line learning algorithm just needs to wait for the label to become available. This is true in our previous example of stock market prediction and many other problems.

A prototypical online supervised learning algorithm

In the setting of supervised learning, or learning from examples, we are interested in learning a function $f : X \to Y$, where $X$ is thought of as a space of inputs and $Y$ as a space of outputs, that predicts well on instances that are drawn from a joint probability distribution $p(x, y)$ on $X \times Y$. In this setting, we are given a loss function $V : Y \times Y \to \mathbb{R}$, such that $V(f(x), y)$ measures the difference between the predicted value $f(x)$ and the true value $y$. The ideal goal is to select a function $f \in \mathcal{H}$, where $\mathcal{H}$ is a space of functions called a hypothesis space, so as to minimize the expected risk:

$$I[f] = \mathbb{E}[V(f(x), y)] = \int V(f(x), y)\, dp(x, y)$$

In reality, the learner never knows the true distribution $p(x, y)$ over instances. Instead, the learner usually has access to a training set of examples $(x_1, y_1), \ldots, (x_n, y_n)$ that are assumed to have been drawn i.i.d. from the true distribution $p(x, y)$. A common paradigm in this situation is to estimate a function $\hat{f}$ through empirical risk minimization or regularized empirical risk minimization (usually Tikhonov regularization). The choice of loss function here gives rise to several well-known learning algorithms such as regularized least squares and support vector machines.
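For contrast with the online setting, here is a minimal sketch of regularized empirical risk minimization with the squared loss (Tikhonov-regularized least squares). To stay self-contained it assumes a hypothetical one-dimensional model $f(x) = wx$ with no intercept; minimizing $\frac{1}{n}\sum_i (w x_i - y_i)^2 + \lambda w^2$ then has the closed form $w = \sum_i x_i y_i / (\sum_i x_i^2 + n\lambda)$. Note that it needs the entire training set at once.

```python
from typing import Sequence


def ridge_1d(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Minimize (1/n) * sum((w*x - y)**2) + lam * w**2 over a scalar w."""
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # Setting the derivative to zero gives w = sxy / (sxx + n * lam).
    return sxy / (sxx + n * lam)


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
print(ridge_1d(xs, ys, lam=0.0))  # 2.0 (plain least squares)
print(ridge_1d(xs, ys, lam=1.0))  # 28/17, about 1.647: shrunk toward 0
```

Setting `lam=0.0` recovers ordinary least squares; a positive `lam` trades some training error for a smaller weight.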

The above paradigm is not well-suited to the online learning setting though, as it requires complete a priori knowledge of the entire training set. In the pure online learning approach, the learning algorithm should update a sequence of functions $f_1, f_2, \ldots$ in a way such that the function $f_{t+1}$ depends only on the previous function $f_t$ and the next data point $(x_t, y_t)$. This approach has low memory requirements in the sense that it only requires storage of a representation of the current function $f_t$ and the next data point $(x_t, y_t)$. A related approach that has larger memory requirements allows $f_{t+1}$ to depend on $f_t$ and all previous data points $(x_1, y_1), \ldots, (x_t, y_t)$. We focus solely on the former approach here, and we consider both the case where the data is coming from an infinite stream $(x_1, y_1), (x_2, y_2), \ldots$ and the case where the data is coming from a finite training set $(x_1, y_1), \ldots, (x_n, y_n)$, in which case the online learning algorithm may make multiple passes through the data.

The algorithm and its interpretations

Here we outline a prototypical online learning algorithm in the supervised learning setting and we discuss several interpretations of this algorithm. For simplicity, consider the case where $X = \mathbb{R}^d$, $Y \subseteq \mathbb{R}$, and $\mathcal{H} = \{\langle w, \cdot \rangle : w \in \mathbb{R}^d\}$ is the set of all linear functionals from $X$ into $\mathbb{R}$, i.e. we are working with a linear kernel and functions $f \in \mathcal{H}$ can be identified with vectors $w \in \mathbb{R}^d$. Furthermore, assume that $V(\cdot, \cdot)$ is a convex, differentiable loss function. An online learning algorithm satisfying the low memory property discussed above consists of the following iteration:

$$w_{t+1} \leftarrow w_t - \gamma_t \nabla V(\langle w_t, x_t \rangle, y_t)$$

where $w_1 \leftarrow 0$, $\nabla V(\langle w_t, x_t \rangle, y_t)$ is the gradient of the loss for the next data point $(x_t, y_t)$ evaluated at the current linear functional $w_t$, and $\gamma_t > 0$ is a step-size parameter. In the case of an infinite stream of data, one can run this iteration, in principle, forever, and in the case of a finite but large set of data, one can consider a single pass or multiple passes (epochs) through the data.
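The iteration $w_{t+1} = w_t - \gamma_t \nabla V(\langle w_t, x_t \rangle, y_t)$ with $w_1 = 0$ can be sketched in plain Python for one concrete loss. Assumptions not taken from the text: the squared loss $V(p, y) = \frac{1}{2}(p - y)^2$, whose gradient with respect to $w$ is $(\langle w, x \rangle - y)\,x$; a constant step size $\gamma_t = 0.1$; and a small hypothetical noise-free data set generated by $w = (2, -1)$ and replayed for several epochs.

```python
from typing import Callable, Iterable, List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def online_gradient_descent(
    stream: Iterable[Tuple[Sequence[float], float]],
    dim: int,
    step_size: Callable[[int], float],
) -> List[float]:
    """w_{t+1} = w_t - gamma_t * grad V(<w_t, x_t>, y_t) for the squared loss."""
    w = [0.0] * dim  # w_1 = 0
    for t, (x, y) in enumerate(stream, start=1):
        residual = dot(w, x) - y  # derivative of 0.5 * (p - y)**2 at p = <w_t, x_t>
        gamma = step_size(t)
        # Chain rule: the gradient with respect to w is residual * x_t.
        w = [wi - gamma * residual * xi for wi, xi in zip(w, x)]
    return w


data = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0), ((1.0, 1.0), 1.0), ((1.0, -1.0), 3.0)]
epochs = 200
stream = [example for _ in range(epochs) for example in data]  # multiple passes
w = online_gradient_descent(stream, dim=2, step_size=lambda t: 0.1)
print([round(wi, 6) for wi in w])  # [2.0, -1.0]
```

Only the current `w` and one example are held at each step, which is the low-memory property described above. Passing a decaying schedule such as `lambda t: 0.1 / t ** 0.5` instead of a constant changes which of the interpretations below the run is best suited to.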

Interestingly enough, the above simple iterative online learning algorithm has three distinct interpretations, each of which has distinct implications about the predictive quality of the sequence of functions $w_1, w_2, \ldots$. The first interpretation considers the above iteration as an instance of the stochastic gradient descent method applied to the problem of minimizing the expected risk $I[w]$ defined above.[2] Indeed, in the case of an infinite stream of data, since the examples $(x_1, y_1), (x_2, y_2), \ldots$ are assumed to be drawn i.i.d. from the distribution $p(x, y)$, the gradients of $V(\cdot, \cdot)$ in the above iteration form an i.i.d. sample of stochastic estimates of the gradient of the expected risk $I[w]$, and therefore one can apply complexity results for the stochastic gradient descent method to bound the deviation $I[w_t] - I[w^\ast]$, where $w^\ast$ is the minimizer of $I[w]$.[3] This interpretation is also valid in the case of a finite training set; although with multiple passes through the data the gradients are no longer independent, complexity results can still be obtained in special cases.

The second interpretation applies to the case of a finite training set and considers the above recursion as an instance of the incremental gradient descent method[4] to minimize the empirical risk:

$$I_n[w] = \frac{1}{n} \sum_{i=1}^{n} V(\langle w, x_i \rangle, y_i)$$

Since the gradients of $V(\cdot, \cdot)$ in the above iteration are also stochastic estimates of the gradient of $I_n[w]$, this interpretation is also related to the stochastic gradient descent method, but applied to minimize the empirical risk as opposed to the expected risk. Since this interpretation concerns the empirical risk and not the expected risk, multiple passes through the data are readily allowed and actually lead to tighter bounds on the deviations $I_n[w_t] - I_n[w^\ast_n]$, where $w^\ast_n$ is the minimizer of $I_n[w]$.
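Under this second interpretation, repeated passes over a finite training set should drive the empirical risk $I_n[w] = \frac{1}{n}\sum_i V(\langle w, x_i \rangle, y_i)$ down. The sketch below, again assuming the squared loss $V(p, y) = \frac{1}{2}(p - y)^2$, a constant step size, and a small hypothetical noise-free training set, records $I_n[w]$ after every epoch of incremental gradient steps.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def empirical_risk(w: Sequence[float], data: Sequence[Example]) -> float:
    """I_n[w] = (1/n) * sum of 0.5 * (<w, x_i> - y_i)**2."""
    return sum(0.5 * (dot(w, x) - y) ** 2 for x, y in data) / len(data)


def risk_per_epoch(data: Sequence[Example], epochs: int, gamma: float) -> List[float]:
    """Incremental gradient descent; returns I_n[w] before and after each epoch."""
    w = [0.0] * len(data[0][0])  # w_1 = 0
    risks = [empirical_risk(w, data)]
    for _ in range(epochs):
        for x, y in data:  # one incremental gradient step per example
            residual = dot(w, x) - y
            w = [wi - gamma * residual * xi for wi, xi in zip(w, x)]
        risks.append(empirical_risk(w, data))
    return risks


data = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0), ((1.0, 1.0), 1.0), ((1.0, -1.0), 3.0)]
risks = risk_per_epoch(data, epochs=20, gamma=0.1)
print(risks[0], risks[-1])
```

On this toy data the empirical risk starts at 1.875 (for $w_1 = 0$) and shrinks at every epoch, because a zero-risk minimizer exists; with noisy labels it would level off at $I_n[w^\ast_n] > 0$ instead.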

The third interpretation of the above recursion is distinctly different from the first two and concerns the case of sequential trials discussed above, where the data are potentially not i.i.d. and can perhaps be selected in an adversarial manner. At each step of this process, the learner is given an input $x_t$ and makes a prediction based on the current linear function $w_{t-1}$. Only after making this prediction does the learner see the true label $y_t$, at which point the learner is allowed to update $w_{t-1}$ to $w_t$. Since we are not making any distributional assumptions about the data, the goal here is to perform as well as if we could view the entire sequence of examples ahead of time; that is, we would like the sequence of functions $\{w_1, w_2, \ldots\}$ to have low regret relative to any vector $w^\ast$:

$$R_T(w^\ast) = \sum_{t=1}^{T} V(\langle w_{t-1}, x_t \rangle, y_t) - \sum_{t=1}^{T} V(\langle w^\ast, x_t \rangle, y_t)$$

In this setting, the above recursion can be considered as an instance of the online gradient descent method for which there are complexity bounds that guarantee $O(\sqrt{T})$ regret.
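The regret definition can be checked numerically. The sketch below illustrates the idea under stated assumptions and is not the method described in the source. It uses one-dimensional inputs, square loss $V(p, y) = \tfrac12(p - y)^2$, a projection onto the interval $[-1, 1]$, and step sizes $\gamma_t = 1/\sqrt{t}$, which are standard choices for projected online gradient descent. It compares against the best fixed $w^\ast$ in that interval, chosen in hindsight. With inputs in $[-1, 1]$ and labels bounded by 0.8, the gradients are bounded by $G = 1.8$ and the interval has diameter $D = 2$. The usual online-gradient-descent bound of roughly $D^2\sqrt{T}/2 + G^2\sqrt{T}$ therefore stays below $6\sqrt{T}$ here. The data stream is hypothetical.

```python
import math
import random
from typing import List, Tuple


def clip(w: float, bound: float) -> float:
    """Project a scalar onto the interval [-bound, bound]."""
    return max(-bound, min(bound, w))


def online_gradient_descent(stream: List[Tuple[float, float]], bound: float = 1.0) -> float:
    """Projected online gradient descent on square loss V(p, y) = 1/2 (p - y)^2.

    Returns the cumulative loss of the online predictions. The prediction at
    round t uses w_{t-1}; the label is revealed only afterwards, then w is updated.
    """
    w = 0.0
    total = 0.0
    for t, (x, y) in enumerate(stream, start=1):
        p = w * x                        # predict with the current w_{t-1}
        total += 0.5 * (p - y) ** 2      # loss suffered at round t
        grad = (p - y) * x               # gradient of V(<w, x>, y) with respect to w
        w = clip(w - grad / math.sqrt(t), bound)  # step size gamma_t = 1 / sqrt(t)
    return total


def best_fixed_loss(stream: List[Tuple[float, float]], bound: float = 1.0) -> float:
    """Cumulative loss of the best fixed w* in [-bound, bound], chosen in hindsight."""
    sxy = sum(x * y for x, y in stream)
    sxx = sum(x * x for x, _ in stream)
    w_star = clip(sxy / sxx, bound)  # 1-D convex quadratic: clipping the minimizer is exact
    return sum(0.5 * (w_star * x - y) ** 2 for x, y in stream)


def regret(stream: List[Tuple[float, float]], bound: float = 1.0) -> float:
    return online_gradient_descent(stream, bound) - best_fixed_loss(stream, bound)


# Hypothetical stream: y = 0.5 x plus bounded noise, so |y| <= 0.8.
rng = random.Random(1)
stream = []
for _ in range(10_000):
    x = rng.uniform(-1.0, 1.0)
    stream.append((x, 0.5 * x + 0.3 * rng.uniform(-1.0, 1.0)))

for T in (100, 1_000, 10_000):
    r = regret(stream[:T])
    print(T, r, r / T)  # total regret and average regret R_T / T
```

The average regret $R_T/T$ is what "low regret" is about. A bound of order $\sqrt{T}$ on $R_T$ means the average tends to zero as $T$ grows.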

It should be noted that although the three interpretations of this algorithm yield complexity bounds in three distinct settings, each bound depends on the choice of step-size sequence $\{\gamma_t\}$ in a different way. Thus we cannot simultaneously apply the consequences of all three interpretations; we must instead select the step-size sequence in a way that is tailored to the most relevant interpretation. Furthermore, the above algorithm and these interpretations can be extended to the case of a nonlinear kernel by simply considering $X$ to be the feature space associated with the kernel. In this case, however, the memory requirements at each iteration are no longer $O(d)$ but are instead on the order of the number of data points considered so far.
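The remark about kernels can be illustrated as follows. In this minimal sketch, the class and function names, the step size $\gamma_t = 0.5/\sqrt{t}$, and the toy data are all hypothetical. The hypothesis is stored as a list of past inputs with one coefficient each, $f(x) = \sum_i \alpha_i K(x_i, x)$, so memory grows by one entry per example instead of staying at $O(d)$. With the linear kernel $K(a, b) = \langle a, b \rangle$, it reproduces the explicit update of $w$ exactly, which the comparison at the end checks.

```python
import math
from typing import Callable, List, Sequence, Tuple

Kernel = Callable[[Sequence[float], Sequence[float]], float]


def linear_kernel(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def gaussian_kernel(a: Sequence[float], b: Sequence[float], width: float = 1.0) -> float:
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq / (2.0 * width ** 2))


class KernelOnlineLeastSquares:
    """Online gradient descent on square loss in the feature space of `kernel`.

    f(x) = sum_i alpha_i K(x_i, x); each update stores one more (x_t, alpha_t)
    pair, so memory grows with the number of points seen so far.
    """

    def __init__(self, kernel: Kernel, step: Callable[[int], float]):
        self.kernel = kernel
        self.step = step  # step(t) returns gamma_t
        self.points: List[List[float]] = []
        self.alphas: List[float] = []

    def predict(self, x: Sequence[float]) -> float:
        return sum(a * self.kernel(p, x) for a, p in zip(self.alphas, self.points))

    def update(self, x: Sequence[float], y: float) -> float:
        """Predict on x, then take one functional gradient step once y is revealed."""
        t = len(self.points) + 1
        p = self.predict(x)
        self.points.append(list(x))
        self.alphas.append(-self.step(t) * (p - y))
        return p


def primal_predictions(stream: List[Tuple[List[float], float]], step: Callable[[int], float]) -> List[float]:
    """Same algorithm with an explicit weight vector w (valid for the linear kernel only)."""
    w = [0.0] * len(stream[0][0])
    preds = []
    for t, (x, y) in enumerate(stream, start=1):
        p = linear_kernel(w, x)
        preds.append(p)
        w = [wi - step(t) * (p - y) * xi for wi, xi in zip(w, x)]
    return preds


def step(t: int) -> float:
    return 0.5 / math.sqrt(t)


stream = [([1.0, 0.0], 1.0), ([0.5, 0.5], 0.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 0.5)]

model = KernelOnlineLeastSquares(linear_kernel, step)
kernel_preds = [model.update(x, y) for x, y in stream]
print(kernel_preds)
print(primal_predictions(stream, step))  # matches up to rounding

rbf_model = KernelOnlineLeastSquares(gaussian_kernel, step)
for x, y in stream:
    rbf_model.update(x, y)
print(len(rbf_model.points))  # one stored point per example seen
```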

IMPORTANCE OF COMPUTERS.

A computer is a general-purpose device that can be programmed to carry out a set of arithmetic or logical operations. Since a sequence of operations can be readily changed, the computer can solve more than one kind of problem.

Conventionally, a computer consists of at least one processing element, typically a central processing unit (CPU), and some form of memory. The processing element carries out arithmetic and logic operations, and a sequencing and control unit can change the order of operations in response to stored information. Peripheral devices allow information to be retrieved from an external source, and the result of operations saved and retrieved.

In World War II, mechanical analog computers were used for specialized military applications. During this time the first electronic digital computers were developed. Originally they were the size of a large room, consuming as much power as several hundred modern personal computers (PCs).

Modern computers based on integrated circuits are millions to billions of times more capable than the early machines, and occupy a fraction of the space.[2] Simple computers are small enough to fit into mobile devices, and mobile computers can be powered by small batteries. Personal computers in their various forms are icons of the Information Age and are what most people think of as “computers.” However, the embedded computers found in many devices from MP3 players to fighter aircraft and from toys to industrial robots are the most numerous.

Etymology

The first use of the word “computer” was recorded in 1613 in a book called “The yong mans gleanings” by English writer Richard Braithwait: “I haue read the truest computer of Times, and the best Arithmetician that euer breathed, and he reduceth thy dayes into a short number.” It referred to a person who carried out calculations, or computations, and the word continued with the same meaning until the middle of the 20th century. From the end of the 19th century the word began to take on its more familiar meaning, a machine that carries out computations.

History

Rudimentary calculating devices first appeared in antiquity and mechanical calculating aids were invented in the 17th century. The first recorded use of the word "computer" is also from the 17th century, applied to human computers, people who performed calculations, often as employment. The first computer devices were conceived of in the 19th century, and only emerged in their modern form in the 1940s.

First general-purpose computing device

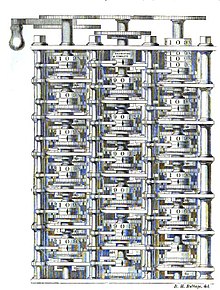

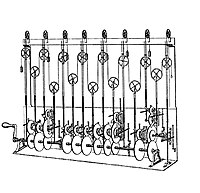

Charles Babbage, an English mechanical engineer and polymath, originated the concept of a programmable computer. Considered the "father of the computer", he conceptualized and invented the first mechanical computer in the early 19th century. After working on his revolutionary difference engine, designed to aid in navigational calculations, in 1833 he realized that a much more general design, an Analytical Engine, was possible. The input of programs and data was to be provided to the machine via punched cards, a method being used at the time to direct mechanical looms such as the Jacquard loom. For output, the machine would have a printer, a curve plotter and a bell. The machine would also be able to punch numbers onto cards to be read in later. The Engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete.

The machine was about a century ahead of its time. All the parts for his machine had to be made by hand, which was a major problem for a device with thousands of parts. Eventually, the project was dissolved with the decision of the British Government to cease funding. Babbage's failure to complete the analytical engine can be chiefly attributed to difficulties not only of politics and financing, but also to his desire to develop an increasingly sophisticated computer and to move ahead faster than anyone else could follow. Nevertheless, his son, Henry Babbage, completed a simplified version of the analytical engine's computing unit (the mill) in 1888. He gave a successful demonstration of its use in computing tables in 1906.

Analog computers

During the first half of the 20th century, many scientific computing needs were met by increasingly sophisticated analog computers, which used a direct mechanical or electrical model of the problem as a basis for computation. However, these were not programmable and generally lacked the versatility and accuracy of modern digital computers.

The first modern analog computer was a tide-predicting machine, invented by Sir William Thomson in 1872. The differential analyser, a mechanical analog computer designed to solve differential equations by integration using wheel-and-disc mechanisms, was conceptualized in 1876 by James Thomson, the brother of the more famous Lord Kelvin.

The art of mechanical analog computing reached its zenith with the differential analyzer, built by H. L. Hazen and Vannevar Bush at MIT starting in 1927. This built on the mechanical integrators of James Thomson and the torque amplifiers invented by H. W. Nieman. A dozen of these devices were built before their obsolescence became obvious.

The modern computer

The principle of the modern computer was first described by computer scientist Alan Turing, who set out the idea in his seminal 1936 paper, On Computable Numbers. Turing reformulated Kurt Gödel's 1931 results on the limits of proof and computation, replacing Gödel's universal arithmetic-based formal language with the formal and simple hypothetical devices that became known as Turing machines. He proved that some such machine would be capable of performing any conceivable mathematical computation if it were representable as an algorithm. He went on to prove that there was no solution to the Entscheidungsproblem by first showing that the halting problem for Turing machines is undecidable: in general, it is not possible to decide algorithmically whether a given Turing machine will ever halt.

He also introduced the notion of a 'Universal Machine' (now known as a Universal Turing machine), with the idea that such a machine could perform the tasks of any other machine, or in other words, it is provably capable of computing anything that is computable by executing a program stored on tape, allowing the machine to be programmable. Von Neumann acknowledged that the central concept of the modern computer was due to this paper. Turing machines are to this day a central object of study in theory of computation. Except for the limitations imposed by their finite memory stores, modern computers are said to be Turing-complete, which is to say, they have algorithm execution capability equivalent to a universal Turing machine.
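A Turing machine is simple enough to simulate in a few lines. The sketch below is an illustration, not from the source, and the increment machine, the never-halting machine and the function names are hypothetical. The transition table is ordinary data passed to one fixed simulator, which is loosely the idea behind a universal machine. The step limit reflects the halting problem: the simulator cannot know in advance whether a given machine will ever stop, so it simply gives up after a set number of steps.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move, next state)
Table = Dict[Tuple[str, str], Tuple[str, str, str]]

BLANK = "_"

# Hypothetical example machine: add 1 to a binary number, starting on its last digit.
INCREMENT: Table = {
    ("carry", "1"): ("0", "L", "carry"),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "N", "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", "N", "halt"),  # ran off the left end: new leading 1
}

# Hypothetical machine that never halts: it moves right over blanks forever.
LOOP_FOREVER: Table = {("go", BLANK): (BLANK, "R", "go")}


def run_turing_machine(table: Table, tape: str, head: int, state: str,
                       max_steps: int = 10_000) -> str:
    """Simulate a one-tape Turing machine until it enters the state 'halt'."""
    cells = dict(enumerate(tape))
    moves = {"L": -1, "R": 1, "N": 0}
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        write, move, state = table[(state, cells.get(head, BLANK))]
        cells[head] = write
        head += moves[move]
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011", head=3, state="carry"))  # 1100
print(run_turing_machine(INCREMENT, "111", head=2, state="carry"))   # 1000
try:
    run_turing_machine(LOOP_FOREVER, "", head=0, state="go", max_steps=100)
except RuntimeError as err:
    print(err)
```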

Electromechanical computers

Early digital computers were electromechanical: electric switches drove mechanical relays to perform the calculation. These devices had a low operating speed and were eventually superseded by much faster all-electric computers, originally using vacuum tubes. The Z2, created by German engineer Konrad Zuse in 1939, was one of the earliest examples of an electromechanical relay computer.

In 1941, Zuse followed his earlier machine up with the Z3, the world's first working electromechanical programmable, fully automatic digital computer. The Z3 was built with 2,000 relays, implementing a 22-bit word length that operated at a clock frequency of about 5–10 Hz. Program code and data were stored on punched film. It was quite similar to modern machines in some respects, pioneering numerous advances such as floating-point numbers. Replacement of the hard-to-implement decimal system (used in Charles Babbage's earlier design) by the simpler binary system meant that Zuse's machines were easier to build and potentially more reliable, given the technologies available at that time. The Z3 was probably a complete Turing machine.

Electronic programmable computer

Purely electronic circuit elements soon replaced their mechanical and electromechanical equivalents, at the same time that digital calculation replaced analog. The engineer Tommy Flowers, working at the Post Office Research Station in Dollis Hill in the 1930s, began to explore the possible use of electronics for the telephone exchange. Experimental equipment that he built in 1934 went into operation 5 years later, converting a portion of the telephone exchange network into an electronic data processing system, using thousands of vacuum tubes. In the US, John Vincent Atanasoff and Clifford E. Berry of Iowa State University developed and tested the Atanasoff–Berry Computer (ABC) in 1942, the first "automatic electronic digital computer". This design was also all-electronic and used about 300 vacuum tubes, with capacitors fixed in a mechanically rotating drum for memory.

During World War II, the British at Bletchley Park achieved a number of successes at breaking encrypted German military communications. The German encryption machine, Enigma, was first attacked with the help of the electro-mechanical bombes. To crack the more sophisticated German Lorenz SZ 40/42 machine, used for high-level Army communications, Max Newman and his colleagues commissioned Flowers to build the Colossus. He spent eleven months from early February 1943 designing and building the first Colossus. After a functional test in December 1943, Colossus was shipped to Bletchley Park, where it was delivered on 18 January 1944 and attacked its first message on 5 February.

Colossus was the world's first electronic digital programmable computer. It used a large number of valves (vacuum tubes). It had paper-tape input and was capable of being configured to perform a variety of Boolean logical operations on its data, but it was not Turing-complete. Nine Mk II Colossi were built (the Mk I was converted to a Mk II, making ten machines in total). Colossus Mark I contained 1,500 thermionic valves (tubes), while Mark II, with 2,400 valves, was both five times faster and simpler to operate than Mark I, greatly speeding the decoding process.

The US-built ENIAC (Electronic Numerical Integrator and Computer) was the first electronic programmable computer built in the US. Although the ENIAC was similar to the Colossus, it was much faster and more flexible. It was unambiguously a Turing-complete device and could compute any problem that would fit into its memory. Like the Colossus, a "program" on the ENIAC was defined by the states of its patch cables and switches, a far cry from the stored-program electronic machines that came later. Once a program was written, it had to be mechanically set into the machine with manual resetting of plugs and switches.

It combined the high speed of electronics with the ability to be programmed for many complex problems. It could add or subtract 5,000 times a second, a thousand times faster than any other machine. It also had modules to multiply, divide, and take square roots. High-speed memory was limited to 20 words (about 80 bytes). ENIAC was built under the direction of John Mauchly and J. Presper Eckert at the University of Pennsylvania; its development and construction lasted from 1943 to full operation at the end of 1945. The machine was huge: it weighed 30 tons, used 200 kilowatts of electric power, and contained over 18,000 vacuum tubes, 1,500 relays, and hundreds of thousands of resistors, capacitors, and inductors.

Stored program computer

Early computing machines had fixed programs. Changing their function required rewiring and restructuring the machine. The proposal of the stored-program computer changed this. A stored-program computer includes by design an instruction set and can store in memory a set of instructions (a program) that details the computation. The theoretical basis for the stored-program computer was laid by Alan Turing in his 1936 paper. In 1945 Turing joined the National Physical Laboratory and began work on developing an electronic stored-program digital computer. His 1945 report ‘Proposed Electronic Calculator’ was the first specification for such a device. John von Neumann at the University of Pennsylvania also circulated his First Draft of a Report on the EDVAC in 1945.

The Manchester Small-Scale Experimental Machine, nicknamed Baby, was the world's first stored-program computer. It was built at the Victoria University of Manchester by Frederic C. Williams, Tom Kilburn and Geoff Tootill, and ran its first program on 21 June 1948. It was designed as a testbed for the Williams tube, the first random-access digital storage device. Although the computer was considered "small and primitive" by the standards of its time, it was the first working machine to contain all of the elements essential to a modern electronic computer. As soon as the SSEM had demonstrated the feasibility of its design, a project was initiated at the university to develop it into a more usable computer, the Manchester Mark 1.

The Mark 1 in turn quickly became the prototype for the Ferranti Mark 1, the world's first commercially available general-purpose computer. Built by Ferranti, it was delivered to the University of Manchester in February 1951. At least seven of these later machines were delivered between 1953 and 1957, one of them to Shell labs in Amsterdam. In October 1947, the directors of British catering company J. Lyons & Company decided to take an active role in promoting the commercial development of computers. The LEO I computer became operational in April 1951 and ran the world's first regular routine office computer job.

Transistor computers

The bipolar transistor was invented in 1947. From 1955 onwards transistors replaced vacuum tubes in computer designs, giving rise to the "second generation" of computers. Compared to vacuum tubes, transistors have many advantages: they are smaller and require less power, so they give off less heat. Silicon junction transistors were much more reliable than vacuum tubes and had a longer, indefinite service life. Transistorized computers could contain tens of thousands of binary logic circuits in a relatively compact space.

At the University of Manchester, a team under the leadership of Tom Kilburn designed and built a machine using the newly developed transistors instead of valves.[31] Their first transistorised computer, and the first in the world, was operational by 1953, and a second version was completed there in April 1955. However, the machine did make use of valves to generate its 125 kHz clock waveforms and in the circuitry to read and write on its magnetic drum memory, so it was not the first completely transistorized computer. That distinction goes to the Harwell CADET of 1955,[32] built by the electronics division of the Atomic Energy Research Establishment at Harwell.[33][34]

The integrated circuit

The next great advance in computing power came with the advent of the integrated circuit. The idea of the integrated circuit was first conceived by a radar scientist working for the Royal Radar Establishment of the Ministry of Defence, Geoffrey W.A. Dummer. Dummer presented the first public description of an integrated circuit at the Symposium on Progress in Quality Electronic Components in Washington, D.C. on 7 May 1952.[35]

The first practical ICs were invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor.[36] Kilby recorded his initial ideas concerning the integrated circuit in July 1958, successfully demonstrating the first working integrated example on 12 September 1958.[37] In his patent application of 6 February 1959, Kilby described his new device as “a body of semiconductor material ... wherein all the components of the electronic circuit are completely integrated.”[38][39] Noyce also came up with his own idea of an integrated circuit half a year later than Kilby.[40] His chip solved many practical problems that Kilby's had not. Produced at Fairchild Semiconductor, it was made of silicon, whereas Kilby's chip was made of germanium.

This new development heralded an explosion in the commercial and personal use of computers and led to the invention of the microprocessor. While the subject of exactly which device was the first microprocessor is contentious, partly due to lack of agreement on the exact definition of the term "microprocessor", it is largely undisputed that the first single-chip microprocessor was the Intel 4004,[41] designed and realized by Ted Hoff, Federico Faggin, and Stanley Mazor at Intel.[42]

Programs

The defining feature of modern computers which distinguishes them from all other machines is that they can be programmed. That is to say that some type of instructions (the program) can be given to the computer, and it will process them. Modern computers based on the von Neumann architecture often have machine code in the form of an imperative programming language.

In practical terms, a computer program may be just a few instructions or extend to many millions of instructions, as do the programs for word processors and web browsers, for example. A typical modern computer can execute billions of instructions per second and rarely makes a mistake over many years of operation. Large computer programs consisting of several million instructions may take teams of programmers years to write, and due to the complexity of the task they almost certainly contain errors.

Stored program architecture

This section applies to most common RAM machine-based computers.

In most cases, computer instructions are simple: add one number to another, move some data from one location to another, send a message to some external device, etc. These instructions are read from the computer's memory and are generally carried out (executed) in the order they were given. However, there are usually specialized instructions to tell the computer to jump ahead or backwards to some other place in the program and to carry on executing from there. These are called “jump” instructions (or branches). Furthermore, jump instructions may be made to happen conditionally so that different sequences of instructions may be used depending on the result of some previous calculation or some external event. Many computers directly support subroutines by providing a type of jump that “remembers” the location it jumped from and another instruction to return to the instruction following that jump instruction.
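The jump, conditional-jump and subroutine instructions described above can be seen in a toy interpreter. This is a minimal sketch of a hypothetical register machine. The opcodes are invented for illustration and do not belong to any real instruction set. A program counter normally advances by one, jumps overwrite it, and `call` pushes the return address onto a stack so that `ret` can resume at the instruction following the call.

```python
from typing import Dict, List, Tuple

Instruction = Tuple  # (opcode, *operands); a hypothetical instruction format


def run(program: List[Instruction], max_steps: int = 10_000) -> Dict[str, int]:
    """Execute a program for a tiny hypothetical register machine.

    Opcodes:
      ('set', r, value)      r <- value
      ('copy', r, s)         r <- s
      ('add', r, s)          r <- r + s
      ('jmp', target)        unconditional jump
      ('jle', r, s, target)  conditional jump: go to target if r <= s
      ('call', target)       jump to a subroutine, remembering where it came from
      ('ret',)               return to the instruction after the matching call
      ('halt',)              stop and return the registers
    """
    regs: Dict[str, int] = {}
    return_stack: List[int] = []
    pc = 0  # program counter: index of the next instruction to execute
    for _ in range(max_steps):
        op, *args = program[pc]
        pc += 1  # by default, carry on with the next instruction in order
        if op == "set":
            regs[args[0]] = args[1]
        elif op == "copy":
            regs[args[0]] = regs[args[1]]
        elif op == "add":
            regs[args[0]] += regs[args[1]]
        elif op == "jmp":
            pc = args[0]
        elif op == "jle":
            if regs[args[0]] <= regs[args[1]]:
                pc = args[2]
        elif op == "call":
            return_stack.append(pc)  # pc already points past the call
            pc = args[0]
        elif op == "ret":
            pc = return_stack.pop()
        elif op == "halt":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached")


def max_program(a: int, b: int) -> List[Instruction]:
    """Main program calls a subroutine that leaves max(a, b) in register 'm'."""
    return [
        ("set", "a", a),       # 0
        ("set", "b", b),       # 1
        ("call", 4),           # 2: jump to MAX; return address is 3
        ("halt",),             # 3
        ("copy", "m", "a"),    # 4: MAX subroutine starts here
        ("jle", "b", "a", 7),  # 5: if b <= a, skip the next instruction
        ("copy", "m", "b"),    # 6
        ("ret",),              # 7
    ]


print(run(max_program(7, 5))["m"])  # 7
print(run(max_program(3, 9))["m"])  # 9
```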

Program execution might be likened to reading a book. While a person will normally read each word and line in sequence, they may at times jump back to an earlier place in the text or skip sections that are not of interest. Similarly, a computer may sometimes go back and repeat the instructions in some section of the program over and over again until some internal condition is met. This is called the flow of control within the program and it is what allows the computer to perform tasks repeatedly without human intervention.

Comparatively, a person using a pocket calculator can perform a basic arithmetic operation such as adding two numbers with just a few button presses. But to add together all of the numbers from 1 to 1,000 would take thousands of button presses and a lot of time, with a near certainty of making a mistake. On the other hand, a computer may be programmed to do this with just a few simple instructions.
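The loop described above takes only a handful of instructions. Here is a minimal Python sketch (the function name is illustrative): a running total, a counter, and a conditional test that sends control back to the top of the loop until the counter passes 1,000.

```python
def sum_to(n: int) -> int:
    """Add 1 + 2 + ... + n the way a simple program would: a loop with a test."""
    total = 0
    i = 1
    while i <= n:  # conditional branch: leave the loop once i exceeds n
        total += i
        i += 1     # then jump back and test the condition again
    return total


print(sum_to(1000))      # 500500
print(1000 * 1001 // 2)  # 500500, the closed form n(n + 1) / 2 as a cross-check
```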

THE SCHOOL DISCIPLINE.

In its natural sense, discipline is systematic instruction intended to train a person, sometimes literally called a disciple, in a craft, trade or other activity, or to follow a particular code of conduct or "order". Often, the phrase "to discipline" carries a negative connotation. This is because enforcement of order (that is, ensuring instructions are carried out) is often regulated through punishment.

Discipline is a course of actions leading to a greater goal than the satisfaction of the immediate. A disciplined person is one who has established a goal and is willing to achieve that goal at the expense of his or her immediate comfort.

Discipline is the assertion of willpower over more base desires, and is usually understood to be synonymous with self control. Self-discipline is to some extent a substitute for motivation, when one uses reason to determine the best course of action that opposes one's desires. Virtuous behavior is when one's motivations are aligned with one's reasoned aims: to do what one knows is best and to do it gladly. Continent behavior, on the other hand, is when one does what one knows is best, but must do it by opposing one's motivations.[1] Moving from continent to virtuous behavior requires training and some self-discipline.

School discipline is the system of rules, punishments, and behavioral strategies appropriate to the regulation of children or adolescents and the maintenance of order in schools. Its aim is to control the students' actions and behavior.

An obedient student is in compliance with the school rules and codes of conduct. These rules may, for example, define the expected standards of clothing, timekeeping, social conduct, and work ethic. The term discipline is also applied to the punishment that is the consequence of breaking the rules. The aim of discipline is to set limits restricting certain behaviors or attitudes that are seen as harmful or going against school policies, educational norms, school traditions, et cetera.

Theory.

School discipline practices are generally informed by theory from psychologists and educators. A number of theories can be drawn on to form a comprehensive discipline strategy for an entire school or a particular class.

- Positive Approach to Discipline (PAD) is grounded in teachers' respect for students. It instills in students a sense of responsibility by using youth/adult partnerships to develop and share clear rules, provide daily opportunities for success, and administer in-school suspension for noncompliant students. It is based on Glasser's Reality Therapy. Research (e.g., Allen) is generally supportive of the PAD program.

- Teacher effectiveness training differentiates between teacher-owned and student-owned problems, and proposes different strategies for dealing with each. Students are taught problem-solving and negotiation techniques. Researchers (e.g., Emmer and Aussiker) find that teachers like the programme and that their behaviour is influenced by it, but effects on student behaviour are unclear.

- "Adlerian approaches" is an umbrella term for a variety of methods which emphasize understanding the individual's reasons for maladaptive behavior and helping misbehaving students to alter their behavior, while at the same time finding ways to get their needs met. The approaches are named for psychiatrist Alfred Adler. They have shown some positive effects on self-concept, attitudes, and locus of control, but effects on behavior are inconclusive (Emmer and Aussiker). Not only were the statistics on suspensions and vandalism significant, but the recorded interviews of teachers also demonstrate improvement in student attitude and behaviour, school atmosphere, academic performance, and, beyond that, personal and professional growth.

- Appropriate school learning theory and educational philosophy is a strategy for preventing violence and promoting order and discipline in schools, put forward by educational philosopher Daniel Greenberg and practised by the Sudbury Valley School.

Corporal punishment

Throughout the history of education the most common means of maintaining discipline in schools was corporal punishment. While a child was in school, a teacher was expected to act as a substitute parent, with many forms of parental discipline or rewards open to them. This meant that students were commonly chastised with the birch, cane, paddle, strap or yardstick if they did something wrong.

Corporal punishment in schools has now disappeared from most Western countries, including all European countries. Thirty-one U.S. states as well as the District of Columbia have banned it, most recently New Mexico in 2011. The other nineteen states (mostly in the South) continue to allow corporal punishment in schools. Paddling is still used to a significant (though declining) degree in some public schools in Alabama, Arkansas, Georgia, Louisiana, Mississippi, Oklahoma, Tennessee and Texas. Private schools in these and most other states may also use it, though many choose not to do so.

Official corporal punishment, often by caning, remains commonplace in schools in some Asian, African and Caribbean countries.

Most mainstream schools in most other countries retain punishment for misbehaviour, but it usually takes non-corporal forms such as detention and suspension.

Detention.

Detention is one of the most common punishments in schools in the United States, Britain, Ireland, Singapore, Canada, Australia, South Africa and some other countries. It requires the pupil to go to a designated area of the school during a specified time on a school day (typically either break or after school), but also may require a pupil to go to that part of school at a certain time on a non-school day, e.g. "Saturday detention" at some US, UK and Irish schools (especially for serious offenses not quite serious enough for suspension).

In the UK, the Education Act 1997 obliges a (state) school to give parents or guardians at least 24 hours' notice of a detention outside school hours so that arrangements for transport and/or childcare can be made. The notice should say why the detention was given and, more importantly, how long it will last (detentions usually last anywhere from 10 minutes or less to 5 hours or more).[7]

Typically, in schools in the US, UK and Singapore, if one misses a detention, then another is added or the student gets a more serious punishment. In UK schools, for offenses too serious for a normal detention but not serious enough for a detention requiring the pupil to return to school at a certain time on a non-school day, a detention can require a pupil to return to school 1-2 hours after school ends on a school day, e.g. "Friday Night Detention".

Suspension

Suspension or temporary exclusion is mandatory leave assigned to a student as a form of punishment that can last anywhere from one day to a few weeks, during which time the student is not allowed to attend regular lessons. In some US, Australian and Canadian schools, there are two types of suspension: In-School Suspension (ISS) and Out-of-School Suspension (OSS). In-school suspension requires the student to report to school as usual but sit in one room all day. Out-of-school suspension bans the student from being on school grounds. The student's parents or guardians are notified of the reason for and duration of an out-of-school suspension, and usually also of an in-school suspension. Sometimes students have to complete work during their suspensions, for which they receive no credit (OSS only). In some UK schools, there is Reverse Suspension as well as normal suspension. A suspended pupil is sent home for a set period of time. A reverse-suspended pupil is required to be at school during the holidays. Pupils often have to complete work while reverse suspended.

Expulsion

Expulsion, exclusion, withdrawing or permanent exclusion bans the student from being on school grounds permanently. This is the last resort, used when all other methods of discipline have failed. However, in extreme situations, it may also be used for a single offense.[9] Some education authorities have a nominated school in which all excluded students are collected; this typically has a much higher staffing level than mainstream schools. In some US public schools, expulsions and exclusions are so serious that they require an appearance before the Board of Education or the court system. In the UK, head teachers may make the decision to exclude, but the student's parents have the right of appeal to the local education authority. This has proved controversial in cases where the head teacher's decision has been overturned (and his or her authority thereby undermined), and there are proposals to abolish the right of appeal.

Expulsion from a private school is a more straightforward matter, since the school can merely terminate its contract with the parents if the pupil does not have siblings in the same school.

"MOHANDAS KARAMCHAND GANDHI"

Who Was Mohandas Karamchand Gandhi?

Mohandas Gandhi is considered the father of the Indian independence movement. Gandhi spent 20 years in South Africa working to fight discrimination. It was there that he created his concept of satyagraha, a non-violent way of protesting against injustices. While in India, Gandhi's obvious virtue, simple lifestyle, and minimal dress endeared him to the people. He spent his remaining years working diligently both to remove British rule from India and to better the lives of India's poorest classes. Many civil rights leaders, including Martin Luther King Jr., used Gandhi's concept of non-violent protest as a model for their own struggles.

Dates:

October 2, 1869 - January 30, 1948

Also Known As:

Mohandas Karamchand Gandhi, Mahatma ("Great Soul"), Father of the Nation, Bapu ("Father"), Gandhiji

Gandhi's Childhood

Mohandas Gandhi was the last child of his father (Karamchand Gandhi) and his father's fourth wife (Putlibai). During his youth, Mohandas Gandhi was shy, soft-spoken, and only a mediocre student at school. Although generally an obedient child, at one point Gandhi experimented with eating meat, smoking, and a small amount of stealing, all of which he later regretted. At age 13, Gandhi married Kasturba (also spelled Kasturbai) in an arranged marriage. Kasturba bore Gandhi four sons and supported Gandhi's endeavors until her death in 1944.

Off to London

In September 1888, at age 18, Gandhi left India, without his wife and newborn son, in order to study to become a barrister (lawyer) in London. Attempting to fit into English society, Gandhi spent his first three months in London trying to make himself into an English gentleman by buying new suits, fine-tuning his English accent, learning French, and taking violin and dance lessons. After three months of these expensive endeavors, Gandhi decided they were a waste of time and money. He then cancelled all of these classes and spent the remainder of his three-year stay in London being a serious student and living a very simple lifestyle.

In addition to learning to live a very simple and frugal lifestyle, Gandhi discovered his lifelong passion for vegetarianism while in England. Although most of the other Indian students ate meat while they were in England, Gandhi was determined not to do so, in part because he had vowed to his mother that he would stay a vegetarian. In his search for vegetarian restaurants, Gandhi found and joined the London Vegetarian Society. The Society consisted of an intellectual crowd who introduced Gandhi to different authors, such as Henry David Thoreau and Leo Tolstoy. It was also through members of the Society that Gandhi began to really read the Bhagavad Gita, an epic poem which is considered a sacred text to Hindus. The new ideas and concepts that he learned from these books set the foundation for his later beliefs.

Gandhi successfully passed the bar on June 10, 1891 and sailed back to India two days later. For the next two years, Gandhi attempted to practice law in India. Unfortunately, Gandhi found that he lacked both knowledge of Indian law and self-confidence at trial. When he was offered a year-long position to take a case in South Africa, he was thankful for the opportunity.

Gandhi Arrives in South Africa

At age 23, Gandhi once again left his family behind and set off for South Africa, arriving in British-governed Natal in May 1893. Although he hoped to earn some money and learn more about law, it was in South Africa that Gandhi transformed from a quiet, shy man into a resilient and powerful leader against discrimination. The transformation began during a business trip taken shortly after his arrival.

Gandhi had been in South Africa for only about a week when he was asked to make the long journey from Natal to the capital of the Dutch-governed Transvaal province for his case. The trip would take several days and involve travel by train and by stagecoach. On the first train of his journey, railroad officials told Gandhi that he had to move to the third-class passenger car. When Gandhi, who held a first-class ticket, refused, a policeman threw him off the train at Pietermaritzburg station.

That was not the last of the injustices Gandhi suffered on this trip. As he talked to other Indians in South Africa (derogatorily called "coolies"), he found that his experiences were not isolated incidents; such treatment was common. During that first night, sitting in the cold railroad station after being thrown off the train, Gandhi weighed whether to return home to India or to stay and fight the discrimination. After much thought, he decided that he could not let these injustices continue and that he would fight to change these discriminatory practices.

Gandhi, the Reformer

Gandhi spent the next twenty years working to improve the rights of Indians in South Africa. During the first three years, he learned more about Indian grievances, studied the law, wrote letters to officials, and organized petitions. On May 22, 1894, Gandhi established the Natal Indian Congress (NIC). Although the NIC began as an organization for wealthy Indians, Gandhi worked diligently to expand its membership to all classes and castes. Gandhi became well known for his activism, and his actions were covered even by newspapers in England and India. Within a few short years, he had become a leader of the Indian community in South Africa.

In 1896, after three years in South Africa, Gandhi sailed to India intending to bring his wife and two sons back with him. While he was in India, a bubonic plague broke out. Since poor sanitation was then believed to be the cause of the plague's spread, Gandhi offered to help inspect latrines and suggest improvements in sanitation. Although others were willing to inspect the latrines of the wealthy, Gandhi personally inspected the latrines of the untouchables as well as those of the rich. He found that the wealthy had the worst sanitation problems.

THE PI DAY.

Pi Day is an annual celebration commemorating the mathematical constant π (pi). Pi Day is observed on March 14 (or 3/14 in the U.S. month/day date format), since 3, 1, and 4 are the three most significant digits of π in decimal form. In 2009, the United States House of Representatives supported the designation of Pi Day.

In 2015, Pi Day had special significance: on 3/14/15 at 9:26:53 a.m. and p.m., the date and time together represented the first 10 digits of π (3.141592653).

Pi Approximation Day is observed on July 22 (or 22/7 in the day/month date format), since the fraction 22/7 is a common approximation of π.
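Both date coincidences are simple arithmetic, so a small Python sketch can check them. It assumes Python's built-in `math.pi` as the reference value of π; the helper names `pi_day_digits`, `first_pi_digits` and `approximation_error` are hypothetical, chosen only for this illustration.

```python
import math


def pi_day_digits() -> str:
    """Digits of 3/14/15 at 9:26:53 written as one string (hypothetical helper)."""
    # month, day, two-digit year, then hour, minutes, seconds
    return "3" + "14" + "15" + "9" + "26" + "53"


def first_pi_digits(n: int) -> str:
    """First n digits of pi, truncated rather than rounded."""
    # str(math.pi) is '3.141592653589793'; drop the decimal point and cut
    return str(math.pi).replace(".", "")[:n]


def approximation_error(numerator: int, denominator: int) -> float:
    """Absolute difference between a fraction and math.pi."""
    return abs(numerator / denominator - math.pi)


if __name__ == "__main__":
    print(pi_day_digits() == first_pi_digits(10))       # True
    print(f"22/7 = {22 / 7:.6f}")                       # 22/7 = 3.142857
    print(f"error = {approximation_error(22, 7):.6f}")  # error = 0.001264
```

Truncation rather than rounding matters here: formatting `math.pi` to nine decimal places would round the tenth digit up to 4, and the match with 9:26:53 would fail.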

History

The earliest known official or large-scale celebration of Pi Day was organized by Larry Shaw in 1988 at the San Francisco Exploratorium, where Shaw worked as a physicist, with staff and public marching around one of its circular spaces and then eating fruit pies. The Exploratorium continues to hold Pi Day celebrations.

On March 12, 2009, the U.S. House of Representatives passed a non-binding resolution (H.Res. 224) recognizing March 14, 2009, as National Pi Day.

For Pi Day 2010, Google presented a Google Doodle celebrating the holiday, with the word "Google" laid over images of circles and pi symbols.

Observance

Pi Day has been observed in many ways, including eating pie, throwing pies, and discussing the significance of the number π. Some schools hold competitions to see which student can recite π to the most decimal places.

The Massachusetts Institute of Technology (MIT) has often mailed its application decision letters to prospective students for delivery on Pi Day. Starting in 2012, MIT announced that it would post those decisions (privately) online on Pi Day at exactly 6:28 p.m., which it calls "Tau Time", to honor the rival constants π and τ equally (τ = 2π ≈ 6.28).

The town of Princeton, New Jersey, hosts numerous events in a combined celebration of Pi Day and Albert Einstein's birthday, which is also March 14. Einstein lived in Princeton for more than twenty years while working at the Institute for Advanced Study. In addition to pie-eating and recitation contests, there is an annual Einstein look-alike contest.

THE SARVODAYA DAY

Sarvodaya (Devanagari: सर्वोदय, Gujarati: સર્વોદય) is a term meaning 'universal uplift' or 'progress of all'. The term was coined by Mahatma Gandhi as the title of his 1908 translation of John Ruskin's tract on political economy, Unto This Last, and Gandhi came to use it for the ideal of his own political philosophy.[1] Later Gandhians, such as the Indian nonviolence activist Vinoba Bhave, embraced the term as a name for the social movement in post-independence India that strove to ensure that self-determination and equality reached all strata of Indian society.

Origins and Gandhi's political ideal

Gandhi received a copy of Ruskin's Unto This Last from a British friend, Henry Polak, in 1904, while working as a lawyer in South Africa. In his Autobiography, Gandhi recalls that he first read the book during a twenty-four-hour train journey to Durban and was so gripped by Ruskin's ideas that he could not sleep at all: "I determined to change my life in accordance with the ideals of the book."[2] As Gandhi construed it, Ruskin's outlook on political-economic life rested on three central tenets:

1. That the good of the individual is contained in the good of all.
2. That a lawyer's work has the same value as the barber's, inasmuch as all have the same right of earning their livelihood from their work.
3. That a life of labour, i.e., the life of the tiller of the soil and the handicraftsman, is the life worth living.

"The first of these I knew. The second I had dimly realized. The third had never occurred to me. Unto This Last made it clear as daylight for me that the second and third were contained in the first. I arose with the dawn, ready to reduce these principles to practice."[2]

Four years later, in 1908, Gandhi produced a paraphrased translation of Ruskin's book in his native Gujarati. He titled the book Sarvodaya, a compound (samāsa) he coined from two Sanskrit roots: sarva (all) and udaya (uplift) -- "the uplift of all" (or, as Gandhi glossed it in his autobiography, "the welfare of all").

Although inspired by Ruskin, the term came to stand, for Gandhi, for a political ideal of his own. (Indeed, Gandhi was keen to distance himself from Ruskin's more conservative ideas.)[3] Gandhi hoped that the ideal he strove to put into practice in his ashrams was one he could persuade the whole of India to embrace, so that India would become a light to the other nations of the world. The Gandhian social ideal encompassed the dignity of labor, an equitable distribution of wealth, communal self-sufficiency, and individual freedom.[4]

Sarvodaya movement

Gandhi's ideals have outlasted the achievement of one of his chief projects, Indian independence (swaraj). His followers in India, notably Vinoba Bhave, continued working to promote the kind of society he envisioned, and their efforts have come to be known as the Sarvodaya Movement. Anima Bose has described the movement's philosophy as "a fuller and richer concept of people's democracy than any we have yet known." During the 1950s and 1960s, Sarvodaya workers associated with Vinoba, J. P. Narayan, Dada Dharmadhikari, Dhirendra Mazumdaar, Shankarrao Deo, and K. G. Mashruwala undertook various projects aimed at encouraging popular self-organisation, including the Bhoodan and Gramdan movements. Many groups descended from these networks continue to function locally in India today.

Beginning on the first anniversary of the immersion of Gandhi's ashes, an annual Sarvodaya mela, or festival, has been held at Srirangapatna[5] and at Tirunavaya. At the latter site, it was instituted by K. Kelappan (Kelappaji).

AN IDEAL STUDENT.

A student who knows that studying is not merely a process of reaching the goal, but that studying is itself a goal; a student who knows that studying itself is more important than the goal: that is an Ideal Student.

Yes, friends, I am going to talk about an Ideal Student.

As Lord Krishna says, knowledge is itself the reward. True Knowledge is the only barometer against which we can measure an Ideal Student.

What is this world without True Knowledge? Suffering.

When the rays of the sun fall on the earth, the shadows disappear. Similarly, when True Knowledge is acquired, suffering ceases. But True Knowledge is available only to an Ideal Student. That is why, in our India, students were first prepared morally and only then accepted by their Gurus.

When a student who has been illuminated by True Knowledge from a true Guru walks on this earth, the earth dances with joy to touch his feet, the trees sway in joy to see him, and the worlds shake in respect.

ROBERT JAMES LEE HAWKE

Robert James Lee Hawke AC GCL (born 9 December 1929) is an Australian politician who was the 23rd Prime Minister of Australia and the Leader of the Labor Party from 1983 to 1991. After a decade as President of the Australian Council of Trade Unions, he was elected to the House of Representatives as the Labor MP for Wills in 1980. Three years later, he led Labor to a landslide election victory and was sworn in as Prime Minister. He led Labor to victory at three more elections, in 1984, 1987 and 1990, making him Labor's most electorally successful leader. Hawke was eventually replaced by Paul Keating at the end of 1991. He remains Labor's longest-serving Prime Minister and is Australia's third-longest-serving Prime Minister.

Early life and education

Hawke was born in Bordertown, South Australia, to Clem, a Congregationalist minister, and his wife Ellie. His uncle, Albert, was the Labor Premier of Western Australia between 1953 and 1959 and was also a close friend of Prime Minister John Curtin, who was in many ways Bob Hawke's role model. Ellie Hawke had an almost messianic belief in her son's destiny, and this contributed to his supreme self-confidence throughout his career.[1]

Both his parents were of Cornish origin, and he himself has stated that his background is Cornish.[2][3] This led the Cornish writer and historian A. L. Rowse to write, "Bob Hawke's characteristics are as Cornish as Australian. I know them well; the aggressive individualism, the egoism, the touchiness, the liability to resentment, even a touch of vindictiveness."[4]

While attending the 1952 World Christian Youth Conference in Kottayam, southern India, Hawke was struck by "this enormous sense of irrelevance of religion to the needs of the people". He subsequently abandoned his Christian beliefs,[5] and by the time he entered politics he was a self-described agnostic.[6] Hawke told Andrew Denton in 2008 that his father's Christian faith nonetheless continued to influence his outlook: "[My father] said if you believe in the fatherhood of God you must necessarily believe in the brotherhood of man, it follows necessarily, and even though I left the church and was not religious, that truth remained with me."[7]

Hawke was educated at Perth Modern School and the University of Western Australia, where he graduated with a Bachelor of Arts and a Bachelor of Laws. At the age of 15, he boasted, accurately as it turned out, that he would one day become Prime Minister of Australia.[8] He joined the Labor Party in 1947 at the age of 18 and successfully applied for a Rhodes Scholarship at the end of 1952.[9][10] In 1953, Hawke went to University College, Oxford, to begin a Bachelor of Arts in Philosophy, Politics and Economics (PPE).[11] He soon found that he was covering much the same ground as he had at the University of Western Australia, so he transferred to a Bachelor of Letters, with a thesis on wage-fixing in Australia that he successfully presented in January 1956.[12]